In recent years, artificial intelligence (AI) has advanced rapidly, giving rise to deepfake technology: highly realistic synthetic audio and video. While this innovation has promising applications across many industries, scammers have also exploited it to carry out sophisticated fraud against individuals and businesses.

This post explains how AI-powered deepfake scams work, looks at notable real-world cases, and offers practical strategies to protect yourself and your business from these emerging threats.

What Are Deepfakes?

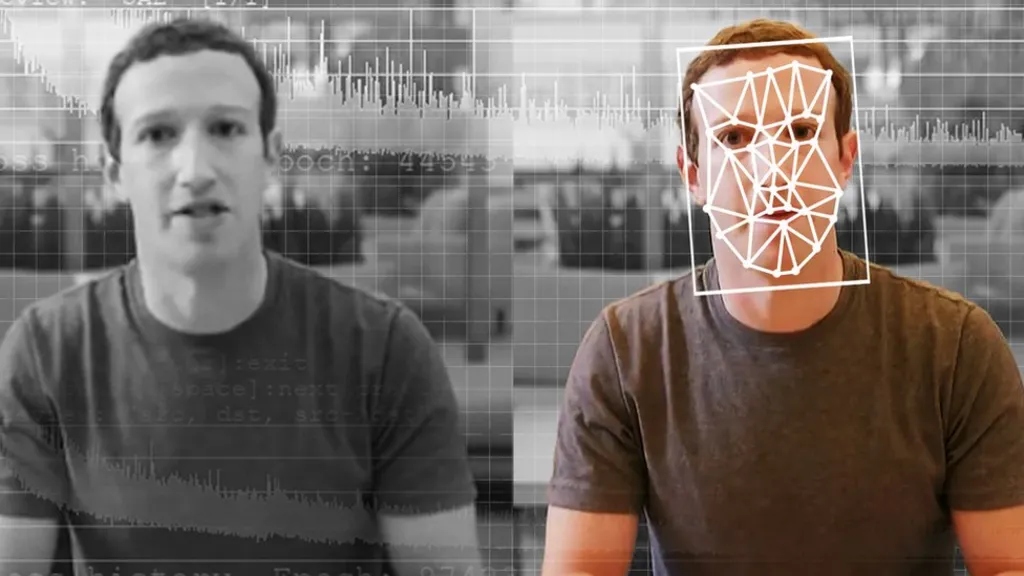

Deepfakes are synthetic media in which artificial intelligence replaces or alters a person's face, voice, or both, often so that they appear to say or do things they never did. The term "deepfake" combines "deep learning" and "fake," reflecting the technology behind these hyper-realistic manipulations. These AI-generated imitations can be so convincing that the average person often cannot tell what is real and what is not.

Deepfakes first drew widespread attention through misuse, particularly fake explicit videos of celebrities and the spread of misinformation. As the technology has improved and become more accessible, its applications have expanded, and so have its risks. Deepfakes have legitimate uses in film production, entertainment, and education, but they are also being exploited for fraud, political manipulation, and identity theft.

How AI-Generated Deepfake Scams Work

Scammers are using deepfake technology with growing sophistication. The most common techniques are:

Voice Cloning: With just a few seconds of someone’s recorded voice (sometimes as little as three seconds), fraudsters can use AI to create a strikingly realistic replica. They then use the cloned voice to make phone calls or leave voice messages that seem to come from a trusted person. For example, a scammer might pose as a family member in distress and urgently ask for money.

Video Deepfakes: AI can create highly convincing fake videos, where a person’s face is swapped onto someone else’s body or manipulated to say things they never actually said. Scammers use these videos to impersonate business executives, government officials, or other authority figures, tricking people into authorizing fraudulent transactions or spreading false information.

AI-Generated Speech (Text-to-Speech Deepfakes): Even without a real voice sample, AI can generate natural-sounding speech from text alone. Scammers can use these synthetic voices to deliver convincing scripted messages, making their deception even more effective.

As deepfake scams become more advanced, it's more important than ever to stay alert and verify information before taking action.

Real-World Cases of AI Deepfake Scams

The CEO Fraud Case: In 2019, the CEO of a UK-based energy company received a call from someone he believed to be the chief executive of the firm's German parent company, instructing him to urgently transfer €220,000 (about $243,000) to a Hungarian supplier. The voice was so realistic that he had no reason to doubt the request. In fact, the caller was using AI-generated audio, and the money was lost to the fraudsters.

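The Political Deepfake: In late 2018, after Gabon’s president, Ali Bongo, had been out of public view for months following a stroke, the government released a video of him delivering a New Year's address. Critics claimed the video was a deepfake, and the suspicion helped fuel an attempted military coup in January 2019. Experts never established that the video was fake, but the episode showed how deepfakes, and even the mere claim that a video is one, can be used to manipulate political narratives and spread misinformation.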

The Family Emergency Scam: In one particularly distressing case, a mother in the United States received a call from someone who sounded exactly like her daughter. The caller claimed to be in trouble and needed money urgently. Believing it was her child, the mother transferred the funds, only to realize later that she had been deceived by a cloned voice.

These cases show how criminals can use deepfake technology to manipulate, defraud, and mislead people. As AI continues to advance, staying vigilant against these evolving threats will only become more important.

More Recent High-Profile Cases

Deepfake scams have continued to produce high-profile incidents:

Several high-profile Italian business leaders, including Giorgio Armani and Patrizio Bertelli, were recently targeted by an elaborate scam using AI voice cloning. Fraudsters mimicked the voice of Italy's defense minister, Guido Crosetto, and called the executives, claiming that urgent financial help was needed to secure the release of kidnapped journalists. At least one of those targeted reportedly transferred a substantial sum before the fraud came to light.

In the UK, cybersecurity expert Jake Moore showcased just how easily AI voice cloning can be used for fraud. In an experiment, he cloned a friend's voice and managed to convince a company's finance director to transfer £250. His demonstration highlighted the alarming potential of this technology to enable financial scams.

Meanwhile, in the U.S., scammers targeted Senator Ben Cardin with a deepfake video call in which they impersonated former Ukrainian Foreign Minister Dmytro Kuleba. The incident showed that deepfake technology is now sophisticated enough to be aimed at even senior officials, raising serious concerns about the growing threat of AI-driven scams.

The Role of Social Media in the Spread of Deepfake Scams

Social media platforms have played a significant role in the proliferation of deepfake scams. These platforms provide a fertile ground for the dissemination of deepfake content, allowing it to reach a wide audience quickly.

The Viral Spread of Deepfakes: Deepfake videos and audio clips can spread like wildfire on social media, making it easy for misinformation to take hold and cause widespread panic. In some cases, deepfakes have been used to sway public opinion or create confusion during crucial moments, like elections. Because social media moves so fast, once a deepfake is out there, it’s difficult to stop or debunk before it does real damage.

Exploiting Trust: Social media is also a goldmine for scammers who prey on people’s trust. A scammer might create a fake profile posing as a well-known public figure, or even as someone the victim knows personally. After starting conversations and building a connection, the scammer can use deepfake audio or video to make a convincing request. Because the victim already trusts the fake persona, these scams are especially effective and dangerous.

How to Protect Against AI Deepfake Scams

As the threat of AI deepfake scams continues to grow, it is essential for individuals and businesses to take proactive steps to protect themselves. Here are some strategies to consider:

1. Educate and Train Employees: One of the most effective ways to protect against deepfake scams is to educate and train employees on how to recognize and respond to them. This includes raising awareness about the existence of deepfake technology and providing guidance on how to verify the authenticity of requests, especially those involving financial transactions.

2. Implement Multi-Factor Authentication (MFA): MFA adds an extra layer of security by requiring users to provide two or more forms of verification before accessing an account or completing a transaction. Because a cloned voice or video cannot supply a one-time code or a physical security key, MFA can help prevent unauthorized access even if a scammer has successfully imitated someone.

3. Use Digital Watermarking: Digital watermarking involves embedding a unique identifier into digital media, such as videos or audio files. This can help verify the authenticity of the media and detect any tampering or manipulation. Some companies are already using digital watermarking to protect their content from deepfake fraud.

4. Leverage AI Detection Tools: Just as AI is being used to create deepfakes, it can also be used to detect them. AI-powered tools can now analyze video and audio files for signs of manipulation, and some can be integrated into security systems to flag suspect content in real time. Detection is not foolproof, however, so these tools work best alongside the other safeguards listed here.

5. Double-Check Requests Through Multiple Channels: If someone asks you to make a financial transaction or share sensitive information, especially if the request is unexpected or urgent, always verify it through a separate channel. For instance, if you receive a phone call from someone claiming to be your boss, follow up by email or call them back on a known and trusted number to confirm the request before taking any action.

6. Stay Updated on the Latest Scams: AI deepfake scams are constantly evolving as scammers develop new tactics. Following trusted cybersecurity sources and keeping up with trends in deepfake technology can help you recognize and avoid a scam before it reaches you.
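Strategy 2 relies on factors a deepfake cannot imitate. One of the most common is a time-based one-time password (TOTP, defined in RFC 6238), the short code shown by authenticator apps: a cloned voice can sound like someone, but it cannot produce a code derived from a secret stored on that person's device. The following Python sketch uses only the standard library to generate and check such codes. The function names (`hotp`, `totp`, `verify_totp`) are illustrative assumptions rather than any product's API, and a production system should use a vetted library and limit the number of attempts.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password as defined in RFC 4226 (HMAC-SHA1)."""
    msg = struct.pack(">Q", counter)  # counter as an 8-byte big-endian integer
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low nibble selects 4 bytes
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time window."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept the code for the current window or up to `window` neighbouring
    windows (to tolerate clock drift), comparing in constant time."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        t = now + drift * step
        if t < 0:
            continue  # no valid window before the Unix epoch
        expected = totp(secret, t, step, digits)
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

In practice, the secret would be created once (for example with `secrets.token_bytes(20)`), enrolled in the user's authenticator app, and checked by the payment or login system itself, so approving a transfer requires a valid code rather than a familiar voice.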
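Strategy 3 can be illustrated with a deliberately simple fragile watermark. The Python sketch below treats media as a list of integer samples (for example, 16-bit audio), computes a keyed hash (HMAC-SHA256) of the samples with their lowest bit cleared, and hides that 256-bit tag in the least-significant bits of the first 256 samples. If the content is later altered, the recomputed hash no longer matches the hidden tag, so verification fails. This is an assumed toy scheme with hypothetical function names: it does not detect changes confined to the lowest bit, and unlike production watermarks it will not survive compression or re-encoding.

```python
import hashlib
import hmac
from typing import List

TAG_BITS = 256  # HMAC-SHA256 produces a 32-byte (256-bit) tag


def _content_tag(samples: List[int], key: bytes) -> bytes:
    # Hash every sample with its least-significant bit cleared, so the tag
    # depends on the content but not on the watermark bits stored in the LSBs.
    payload = ",".join(str(s & ~1) for s in samples).encode()
    return hmac.new(key, payload, hashlib.sha256).digest()


def _to_bits(data: bytes) -> List[int]:
    # Most-significant bit first for each byte.
    return [(byte >> (7 - i)) & 1 for byte in data for i in range(8)]


def embed_watermark(samples: List[int], key: bytes) -> List[int]:
    """Return a copy of `samples` with a keyed content tag hidden in the LSBs."""
    if len(samples) < TAG_BITS:
        raise ValueError("need at least 256 samples to hold the tag")
    tag_bits = _to_bits(_content_tag(samples, key))
    marked = list(samples)
    for i, bit in enumerate(tag_bits):
        marked[i] = (marked[i] & ~1) | bit  # overwrite only the lowest bit
    return marked


def verify_watermark(samples: List[int], key: bytes) -> bool:
    """True if the hidden tag still matches the content
    (changes confined to the lowest bit of each sample are not detected)."""
    if len(samples) < TAG_BITS:
        return False
    expected = _to_bits(_content_tag(samples, key))
    found = [s & 1 for s in samples[:TAG_BITS]]
    return hmac.compare_digest(bytes(found), bytes(expected))
```

The design choice here is fragility: the watermark is meant to break when the media is edited, which suits proving that a recording is unaltered. Robust watermarks, which aim to survive editing and compression, require more elaborate signal-processing techniques.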

