Technology is evolving at a breathtaking pace, and one of the most fascinating frontiers is the development of brain-computer interfaces (BCIs). Meta, formerly Facebook, has been making significant strides in this space, recently demonstrating an AI-driven system that decodes brain activity into text with accuracy unprecedented for a non-invasive method. While challenges remain, these advances could fundamentally reshape communication, particularly for individuals with speech impairments.

This post explores Meta’s latest research, its implications, and how it compares with other developments in the field. We’ll also discuss the challenges, ethical concerns, and future prospects of non-invasive BCIs.

Meta’s Progress in Brain-Computer Interfaces

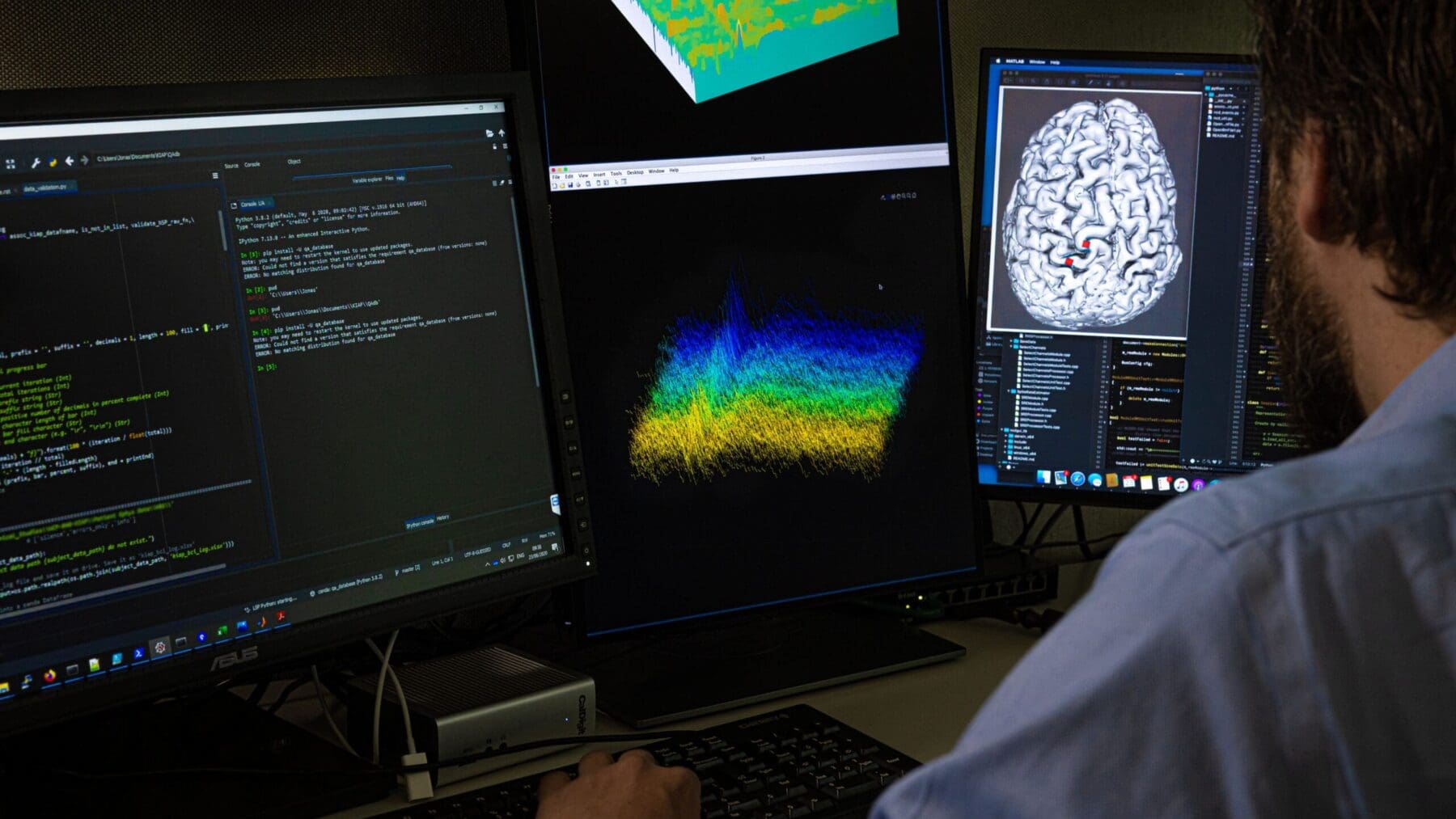

Meta’s Fundamental AI Research (FAIR) lab, in collaboration with international researchers, has developed an AI model capable of translating brain signals into text. This study involved 35 healthy participants whose brain activity was recorded using two techniques:

Magnetoencephalography (MEG): A brain imaging technique with high temporal resolution that measures the magnetic fields generated by neural activity.

Electroencephalography (EEG): A more accessible method that records electrical activity in the brain using electrodes placed on the scalp.

Participants typed sentences while their brain activity was recorded, allowing the AI system to learn the patterns associated with individual keystrokes. The system, called Brain2Qwerty, uses a three-part architecture: a convolutional module that extracts features from the brain signals, a transformer module that models how those features unfold across a sentence, and a pretrained language model that corrects the decoded characters. With MEG, it reconstructed up to 80% of typed characters for the best-performing participants, often enough to recover full sentences; decoding from EEG was considerably less accurate.
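Character-level results like “80% of typed characters” are usually reported through the character error rate (CER): the number of single-character insertions, deletions, and substitutions needed to turn the decoded text into the typed text, divided by the length of the typed text. Character accuracy is then roughly 1 − CER. The sketch below assumes that standard definition; the study’s exact evaluation protocol may differ in details.

```python
def levenshtein(ref, hyp):
    """Minimum number of insertions, deletions and substitutions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from the empty prefix of ref
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(
                prev[j] + 1,             # delete r
                curr[j - 1] + 1,         # insert h
                prev[j - 1] + (r != h),  # substitute (free when characters match)
            ))
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """Edit distance normalized by the length of the reference (typed) text."""
    return levenshtein(reference, hypothesis) / max(len(reference), 1)
```

For example, decoding “the bat sat” when “the cat sat” was typed is one substitution over 11 characters, a CER of about 9%, or roughly 91% of characters correct.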

Implications for Communication and Accessibility

This breakthrough holds immense potential for individuals with severe communication impairments, such as those with ALS, locked-in syndrome, or stroke-induced speech loss. Traditional assistive technologies rely on eye tracking or slow letter-by-letter selection, but a mature non-invasive BCI could allow users to “think” their words and have them appear as text in near real time.

While invasive BCIs, such as those developed by Neuralink and Synchron, record from electrodes implanted in or near the brain and have reported higher accuracy (above 90% in some speech-decoding studies), Meta’s non-invasive approach stands out for its accessibility. Avoiding brain surgery significantly lowers the barrier to entry, which could make the technology feasible for a much wider population.

Challenges in Practical Implementation

Despite its promise, there are significant obstacles to bringing Meta’s technology to everyday use:

1. Hardware Limitations: MEG scanners, while highly accurate, are large, expensive, and require controlled environments. This makes them impractical for consumer applications. EEG, though more portable, suffers from lower spatial resolution and higher noise interference, reducing its accuracy.

2. Accuracy and Generalization: While reconstructing up to 80% of characters is impressive, that figure comes from the best-performing participants under constrained conditions, typing sentences in a controlled laboratory setting, rather than from full, free-flowing conversation. Generalizing across different individuals, brain structures, and thought patterns remains a challenge.

3. Privacy and Ethical Concerns: Reading brain activity raises serious privacy concerns. If BCIs become widespread, how do we ensure that users’ thoughts aren’t accessed or decoded without consent? Could corporations or governments misuse this technology for surveillance or data mining? Ethical frameworks and regulations will need to evolve alongside these advancements to protect mental privacy.

4. Regulatory Hurdles: For clinical applications, BCIs must go through rigorous testing and obtain FDA or equivalent regulatory approval. Given the novelty of this field, it may take years before non-invasive BCIs are deemed reliable and safe for everyday use.
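One common way to measure the cross-person generalization described in point 2 is leave-one-subject-out evaluation: train on every participant except one, then test on the held-out person, whose brain the model has never seen. The helper below is a generic, hypothetical sketch of how such splits can be built; the source does not say which evaluation protocol Meta used.

```python
from collections import defaultdict


def leave_one_subject_out(samples):
    """Yield (held_out_subject, train, test) splits.

    samples: list of (subject_id, recording) pairs. All recordings from the
    held-out subject go to the test set, so no data from that person is
    used for training.
    """
    by_subject = defaultdict(list)
    for subject_id, recording in samples:
        by_subject[subject_id].append((subject_id, recording))

    for held_out in sorted(by_subject):
        test = by_subject[held_out]
        train = [s for sid, group in by_subject.items() if sid != held_out for s in group]
        yield held_out, train, test
```

Averaging the test scores over all folds gives an estimate of how well a decoder would work for a new user without per-user calibration data.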

How Meta’s Work Compares to Other Research

Meta is not alone in the race to decode brain activity into text and speech. Several academic and corporate research teams are exploring different approaches:

University of Texas at Austin (2023): Used fMRI scans combined with GPT-based AI models to decode continuous language from brain activity, achieving ~50% accuracy on semantic tasks.

Stanford University (2023): Developed an invasive BCI that enabled a paralyzed patient to communicate at a speed of 62 words per minute using implanted electrodes.

Google & UCSF (2022): Worked with electrocorticography (ECoG) arrays to decode speech with a 68% word error rate.
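Word error rate (WER) is the word-level counterpart of character error rate: substitutions, deletions, and insertions divided by the number of words in the reference. Lower is better, so a 68% WER corresponds to roughly 68 word edits per 100 reference words, and WER can even exceed 100% when the output contains many extra words. The function below is a minimal sketch of the standard definition, not the evaluation code used in any of the studies above.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # dp[i][j] = edit distance between the first i reference words and first j hypothesis words
    dp = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dp[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dp[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dp[i][j] = min(dp[i - 1][j] + 1,         # deletion
                           dp[i][j - 1] + 1,         # insertion
                           dp[i - 1][j - 1] + cost)  # substitution or match
    return dp[-1][-1] / max(len(ref), 1)
```

For instance, decoding “i want sum water” for “i want some water” is one substitution in four words, a WER of 25%.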

While invasive methods currently offer higher accuracy, Meta’s focus on non-invasive techniques makes it unique and potentially more scalable in the long run.

Meta’s Open-Source Contributions and AI Innovations

One of the most exciting aspects of Meta’s research is its commitment to open-source development. Meta has a history of releasing datasets and AI models openly (Ego4D, a large egocentric video dataset, is one example), and it aims to support community-driven BCI research in the same way, allowing other teams to build on its findings.

Key innovations in Meta’s research include:

Contrastive Learning: A technique that maps brain signals to latent text embeddings, improving decoding efficiency.

Large Language Models (LLMs): The use of AI models like Llama for context-aware decoding, making reconstructed text more coherent and readable.

Self-Supervised Learning: Enabling generalization across different users, reducing the need for individual calibration.
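Contrastive learning trains the brain encoder so that the embedding of a brain recording lands close to the embedding of its matching text and far from the embeddings of the other texts in the same batch. The NumPy function below sketches a symmetric InfoNCE (CLIP-style) loss under that assumption; the function name, embedding shapes, and temperature are illustrative and not taken from Meta’s papers.

```python
import numpy as np


def info_nce_loss(brain_emb, text_emb, temperature=0.1):
    """Symmetric contrastive loss for a batch of matched (brain, text) pairs.

    brain_emb, text_emb: arrays of shape (batch, dim); row i of each is a
    matching pair and every other row in the batch acts as a negative.
    """
    # L2-normalize so the dot product is cosine similarity
    b = brain_emb / np.linalg.norm(brain_emb, axis=1, keepdims=True)
    t = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = b @ t.T / temperature  # (batch, batch) similarity matrix
    labels = np.arange(len(logits))  # correct match for row i is column i

    def cross_entropy(x):
        x = x - x.max(axis=1, keepdims=True)  # numerical stability
        log_probs = x - np.log(np.exp(x).sum(axis=1, keepdims=True))
        return -log_probs[labels, labels].mean()

    # Average the brain-to-text and text-to-brain directions
    return 0.5 * (cross_entropy(logits) + cross_entropy(logits.T))
```

Minimizing this loss pulls matching pairs together in a shared latent space; at inference time, a new brain recording can then be decoded by finding the nearest text embeddings.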

How Meta’s BCI Compares to Other Technologies

BCI research has been a hot topic in neuroscience and AI, with various companies and institutions working to decode brain activity into readable signals. While Meta’s non-invasive approach is groundbreaking, it is worth comparing it to other existing technologies:

Invasive BCIs (Neuralink, Synchron, Stanford University): Companies like Neuralink and research teams at Stanford University have developed invasive BCI systems that use implanted electrodes to record neural activity. These systems generally offer much higher accuracy, with some implanted speech-decoding systems reporting above 90% accuracy. Stanford’s 2023 study enabled a paralyzed patient to communicate at 62 words per minute using an implanted electrode array. However, the need for brain surgery makes these solutions less accessible.

Functional MRI (fMRI) Approaches (University of Texas at Austin, 2023): Researchers at the University of Texas at Austin developed a decoder that combines fMRI scans with GPT-based language models. This system achieved around 50% accuracy in decoding continuous language from brain activity. While fMRI provides excellent spatial resolution, it measures changes in blood oxygenation that lag neural activity by several seconds, which makes it slow and impractical for real-time applications.

Electrocorticography (ECoG) Studies (Google & UCSF, 2022): Google and UCSF explored ECoG-based BCIs, which involve placing electrodes directly on the brain’s surface. Their system had a 68% word error rate, which shows the approach can work but leaves substantial room for improvement.

Meta’s approach stands out because it offers a non-invasive method that achieves impressive results without requiring surgery. While the accuracy is not yet on par with invasive methods, it is a promising step towards making BCIs more accessible and practical.

The Ethical Debate: Who Controls Our Thoughts?

As with any breakthrough technology, BCIs come with ethical dilemmas. The ability to read and interpret brain signals blurs the line between privacy and accessibility. Who should have access to our brain data? Can thoughts be stolen, manipulated, or misused?

Meta, like other tech giants, has faced scrutiny over data privacy concerns in the past. The introduction of BCIs raises an entirely new level of ethical considerations. To address these issues, strong regulations and transparency in AI development will be essential. Users must have full control over their neural data, with robust safeguards against unauthorized access or exploitation.

The Role of AI in Brain Signal Decoding

One of the key innovations in Meta’s research is the use of artificial intelligence to improve decoding accuracy. Their system employs contrastive learning to map brain signals onto latent text representations, allowing for more effective translation of thoughts into text. Additionally, by leveraging large language models (LLMs) like Llama, Meta’s BCI system can enhance coherence by predicting likely word sequences based on context. This is a crucial advancement, as raw brain signal data is often noisy and difficult to interpret without AI assistance.
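The language-model step can be pictured as rescoring: the brain decoder proposes candidate strings with a likelihood, and a language model adds a prior over which strings are plausible text. The sketch below uses a weighted sum of log-probabilities (often called shallow fusion); the `rescore` helper, the weight, and the toy scores are hypothetical, and this is not presented as Meta’s published method.

```python
import math


def rescore(candidates, decoder_logprob, lm_logprob, lm_weight=0.5):
    """Return candidates sorted best-first by a combined score.

    score(text) = log p_decoder(text | brain) + lm_weight * log p_lm(text)
    """
    def score(text):
        return decoder_logprob[text] + lm_weight * lm_logprob[text]

    return sorted(candidates, key=score, reverse=True)


# Hypothetical toy scores: the brain decoder slightly prefers a typo,
# but the language model finds that string far less plausible.
decoder = {"the cat sat": math.log(0.45), "thr cat sat": math.log(0.55)}
lm = {"the cat sat": math.log(1e-2), "thr cat sat": math.log(1e-6)}
best = rescore(list(decoder), decoder, lm)[0]
```

With the language-model weight set to zero, the noisy decoder output wins; with a moderate weight, the coherent sentence is chosen. Tuning that weight trades off faithfulness to the brain signal against fluency.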

Furthermore, Meta’s research has explored self-supervised learning to improve generalization across individuals. Typically, BCI systems require extensive per-user calibration, making them less practical for real-world applications. By incorporating self-supervised learning, Meta has reduced the need for individual tuning, bringing us one step closer to a plug-and-play BCI solution.
