Introduction

The future of robotics is no longer confined to science fiction. By 2025, humanoid robots could become as commonplace in warehouses and hospitals as smartphones are today, thanks to breakthroughs in AI computing. At the heart of this revolution is Nvidia’s Jetson Thor, a powerhouse chip designed explicitly for humanoid systems. With unprecedented performance and a robust ecosystem, Jetson Thor isn’t just another piece of hardware—it’s the catalyst for a new era of intelligent machines. In this deep dive, we’ll explore how Nvidia’s latest innovation is poised to redefine robotics, the industries it will transform, and the challenges it must overcome.

The Rise of Humanoid Robotics and AI’s Role

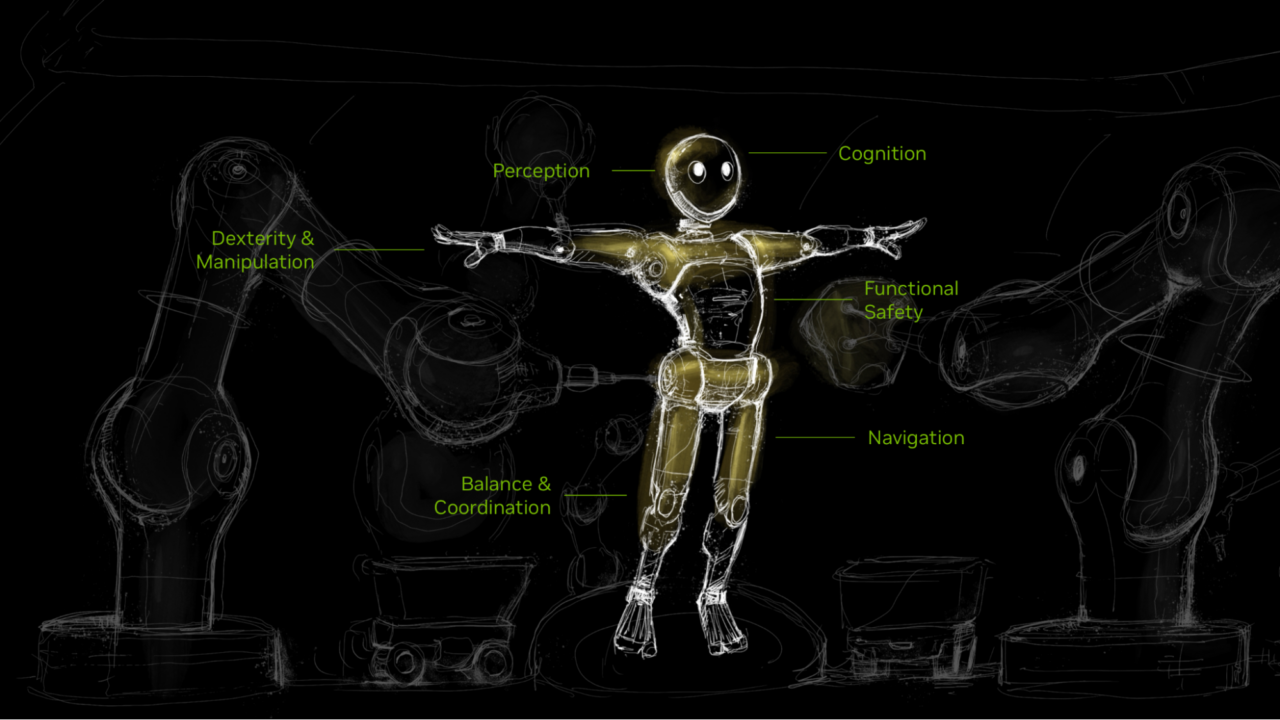

Humanoid robots, machines designed to mimic human movement and interaction, have long been a holy grail for engineers. From Honda’s ASIMO to Boston Dynamics’ Atlas, these robots have historically struggled with limitations in real-time decision-making, energy efficiency, and adaptability. The missing link? AI compute power.

Traditional robots rely on pre-programmed tasks or cloud-based processing, leading to latency and dependency on internet connectivity. Enter edge computing: the ability to process data locally, on the device itself. Nvidia, a leader in AI hardware, recognized this gap early. Their Jetson series has powered drones, delivery bots, and industrial arms for years. But humanoids demand far more: real-time sensor processing, contextual reasoning, and seamless human interaction.

Jetson Thor, announced for release in early 2025, is Nvidia’s answer. Let’s unpack why this platform is a game-changer.

Unveiling Nvidia Jetson Thor: A Technical Marvel

1. Performance: 2000 TOPS and Beyond

Jetson Thor delivers a staggering 2000 TOPS (tera operations per second) of AI performance, roughly seven times that of its predecessor, Jetson Orin (275 TOPS). To put this in perspective, 2000 TOPS corresponds to 2 quadrillion (2 × 10^15) operations every second, enough to run multiple 8K video analyses simultaneously.
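The unit conversion behind these headline figures is easy to check. The short sketch below takes the two TOPS numbers quoted in this article as given (they are vendor-reported peak figures, not measurements) and converts them to raw operations per second; the function and constant names are made up for illustration.

```python
# Peak figures as quoted in the article; treat them as vendor-reported numbers.
THOR_TOPS = 2000   # tera-operations per second
ORIN_TOPS = 275

TERA = 10**12      # one "tera" is 10^12


def tops_to_ops_per_second(tops: int) -> int:
    """Convert a TOPS rating to raw operations per second."""
    return tops * TERA


thor_ops = tops_to_ops_per_second(THOR_TOPS)
speedup = THOR_TOPS / ORIN_TOPS

print(f"Thor: {thor_ops:.1e} operations per second")
print(f"Thor vs. Orin: {speedup:.1f}x")
```

Peak TOPS ratings rarely translate one-to-one into application speedups, so the ratio is best read as an upper bound rather than a promise.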

This leap is powered by Nvidia’s Blackwell architecture, the successor to Hopper. Blackwell optimizes tensor operations (the backbone of AI models) and introduces advanced memory management, reducing bottlenecks during complex tasks like training LLMs or rendering 3D environments.

2. Architectural Innovations: Grace CPU + Blackwell GPU

Thor combines two specialized processors:

Grace CPU: Named after computing pioneer Grace Hopper, this Arm-based CPU excels at sequential processing, handling tasks like path planning and system control.

Blackwell GPU: Optimized for parallel processing, this GPU accelerates AI workloads, from computer vision to generative AI.

The synergy between these chips allows Thor to balance precision and speed. For example, while the GPU processes live camera feeds to detect obstacles, the CPU calculates the safest path in real time.
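To make the CPU half of that division of labor concrete, here is a minimal sketch of path planning over an occupancy grid. It assumes the perception stage has already reduced the camera feed to a small grid of free and blocked cells (the grid below is hypothetical), and it uses plain breadth-first search, which finds the shortest path rather than the "safest" one. It is not the planner Nvidia ships.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search over a 2D occupancy grid (0 = free, 1 = obstacle)."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None                      # goal is unreachable


# Hypothetical occupancy grid standing in for the perception output.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
path = shortest_path(grid, start=(0, 0), goal=(2, 0))
print(path)
```

A real planner would typically weight cells by their clearance from obstacles and replan as the grid updates, but the structure (perception produces a map, the CPU searches it) is the same.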

3. Transformer Engine: Supercharging Generative AI

A standout feature is Thor’s Transformer Engine, designed to optimize transformer-based models (like GPT-4 or Google’s BERT). These models underpin modern AI’s ability to understand context—critical for robots interpreting ambiguous commands like, “Hand me the tool next to the red box.” The engine dynamically adjusts precision (FP8/FP16) during inference, boosting speed without sacrificing accuracy.
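The trade-off behind switching precision can be illustrated with a toy model. The sketch below is not Nvidia's Transformer Engine algorithm: it crudely simulates an FP8 (E4M3) tensor by scaling values so the largest one sits at 448 (the largest finite E4M3 value) and rounding to four significant binary digits, ignoring exponent limits and subnormals. The `pick_precision` policy and its tolerance are hypothetical.

```python
import math

FP8_E4M3_MAX = 448.0  # largest finite value representable in FP8 E4M3


def round_to_bits(x: float, sig_bits: int) -> float:
    """Round x to sig_bits significant binary digits (exponent range ignored)."""
    if x == 0.0:
        return 0.0
    mantissa, exponent = math.frexp(x)   # x = mantissa * 2**exponent, 0.5 <= |mantissa| < 1
    steps = 2 ** sig_bits
    return math.ldexp(round(mantissa * steps) / steps, exponent)


def simulate_fp8(values):
    """Scale the tensor so its largest magnitude maps to the FP8 maximum, round, scale back."""
    amax = max(abs(v) for v in values) or 1.0
    scale = FP8_E4M3_MAX / amax
    # E4M3 stores 3 mantissa bits plus an implicit leading 1: 4 significant bits.
    return [round_to_bits(v * scale, 4) / scale for v in values]


def max_relative_error(values, approx):
    """Largest relative error over the non-zero entries."""
    return max(abs(a - v) / abs(v) for v, a in zip(values, approx) if v != 0.0)


def pick_precision(values, tolerance):
    """Hypothetical policy: use FP8 when its rounding error is tolerable, else FP16."""
    error = max_relative_error(values, simulate_fp8(values))
    return "fp8" if error <= tolerance else "fp16"


activations = [0.1, 0.37, -1.9, 3.3]           # made-up sample values
print(pick_precision(activations, tolerance=0.1))
print(pick_precision(activations, tolerance=1e-4))
```

With four significant bits the relative rounding error is bounded by 1/16, which is why a loose tolerance selects FP8 and a tight one falls back to FP16.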

4. Energy Efficiency: Powering All-Day Operations

Despite its prowess, Thor sips power. Nvidia claims it’s 50% more efficient than Orin, thanks to TSMC’s 4nm fabrication process and software optimizations. For humanoids like 1X’s Eve, which operate for 8+ hours on a single charge, this efficiency is non-negotiable.

Transforming Humanoid Robotics Applications

1. Autonomy: Navigating Complex Environments

Imagine a robot maneuvering through a cluttered hospital corridor, avoiding gurneys and humans. Thor enables this via:

SLAM (Simultaneous Localization and Mapping): Real-time 3D mapping using LiDAR and stereo cameras.

Sensor Fusion: Combining data from infrared, ultrasonic, and inertial sensors to “see” through obstacles like smoke or darkness.
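As a rough illustration of the sensor-fusion idea, the sketch below combines two independent distance readings by inverse-variance weighting, one of the simplest fusion rules. The sensor readings and variances are invented for the example, and a production stack would more likely use a Kalman filter or similar estimator over time.

```python
def fuse(measurements):
    """Inverse-variance weighted fusion of independent (value, variance) estimates."""
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total        # fused estimate and its variance


# Hypothetical range readings: (distance in metres, variance in m^2).
lidar = (2.00, 0.01)        # precise sensor
ultrasonic = (2.30, 0.09)   # noisier sensor
distance, variance = fuse([lidar, ultrasonic])
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f} m^2")
```

The fused estimate sits closer to the more precise sensor, and its variance is lower than either input, which is the practical payoff of combining sensors.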

Companies like Agility Robotics are leveraging Thor for their bipedal robot Digit, designed for last-mile delivery over uneven terrain.

2. Human Interaction: Beyond Voice Commands

Thor’s multimodal AI processes voice, gaze, and gestures. At GTC 2024, Nvidia demoed a Thor-powered robot that:

- Recognized a technician’s frustrated tone and offered troubleshooting steps.

- Detected a fallen object using vision and retrieved it without explicit instructions.

This contextual awareness stems from Thor’s ability to run vision-language models (VLMs), such as those built with Nvidia’s NeMo framework.

3. Safety: Built for Real-World Demands

Industrial robots require fail-safes. Thor integrates ISO 13849 (machinery safety) standards, featuring:

Redundant compute paths: If one processor fails, another takes over instantly.

Real-time monitoring: Detecting overheating or voltage fluctuations to prevent malfunctions.
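The two safety features above can be sketched together: a health check on temperature and voltage, and a failover that picks the first healthy compute unit. The thresholds, class, and function names are all hypothetical, chosen for illustration rather than taken from any Nvidia datasheet or from ISO 13849.

```python
from dataclasses import dataclass

# Hypothetical limits for illustration only.
MAX_TEMP_C = 95.0
MIN_VOLTAGE_V, MAX_VOLTAGE_V = 11.0, 13.0


@dataclass
class ComputeUnit:
    name: str
    temperature_c: float
    voltage_v: float


def is_healthy(unit: ComputeUnit) -> bool:
    """A unit is healthy when temperature and supply voltage are within limits."""
    return (unit.temperature_c <= MAX_TEMP_C
            and MIN_VOLTAGE_V <= unit.voltage_v <= MAX_VOLTAGE_V)


def select_active(units):
    """Return the first healthy unit in priority order, or raise if none is."""
    for unit in units:
        if is_healthy(unit):
            return unit
    raise RuntimeError("no healthy compute unit available; enter safe stop")


primary = ComputeUnit("primary", temperature_c=101.0, voltage_v=12.1)  # overheating
backup = ComputeUnit("backup", temperature_c=62.0, voltage_v=12.0)
active = select_active([primary, backup])
print(active.name)
```

In a certified system this logic would run on independent hardware with its own watchdog; the point here is only the shape of the decision.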

Nvidia’s Ecosystem and Strategic Partnerships

1. Isaac Platform: A Full-Stack Toolkit

Developers using Thor gain access to:

Isaac Sim: A virtual testing environment built on Omniverse. Engineers can simulate robots in digital twins of Amazon warehouses or SpaceX factories, slashing real-world testing costs.

Isaac ROS: Hardware-accelerated ROS 2 packages and pre-trained models for object recognition, gesture control, and more.

2. Collaborations Driving Innovation

Nvidia’s partners are already prototyping with Thor:

Figure AI: Testing humanoids for automotive assembly lines.

Boston Dynamics: Enhancing Atlas’s parkour skills with Thor’s generative AI.

Medtronic: Developing surgical assistants that guide surgeons via AR overlays.

Market Context: How Thor Stacks Up Against Competitors

While Qualcomm’s RB6 (100 TOPS) and Intel’s Agilex FPGAs target basic drones, Thor’s 2000 TOPS and Isaac tools give Nvidia an edge. However, startups like Graphcore argue their IPUs (Intelligence Processing Units) offer better cost-per-TOPS for niche applications.

Yet, Nvidia’s ace is CUDA: a developer ecosystem spanning 4 million programmers. As Jim Fan, Nvidia’s AI scientist, noted: “Hardware is nothing without the layers of software that make it accessible.”

Industry Impact: From Factories to Your Living Room

Logistics: Walmart plans Thor-powered robots for inventory management, reducing reliance on human workers in hazardous freezer sections.

Healthcare: Robots like Toyota’s HSR could fetch supplies or monitor patients’ vitals autonomously.

Consumer: Imagine a household robot that cooks, cleans, and tutors kids—all while learning your preferences.

GTC 2024 Insights: Embodied AI Takes Center Stage

At GTC, Nvidia CEO Jensen Huang unveiled “Project GR00T,” a Thor-powered initiative for general-purpose humanoids. GR00T robots learn by observing humans—think a robot mastering espresso-making by watching a barista. Early partners include Tesla (Optimus) and Sanctuary AI.

Challenges: The Roadblocks Ahead

Cost: Thor’s premium pricing (~$5,000 per module) may deter startups.

Complexity: Smaller teams may lack expertise to optimize models for Blackwell.

Nvidia counters with Jetson Thor Nano, a scaled-down variant, and free training via its Deep Learning Institute.

Jetson Thor isn’t just a chip—it’s the cornerstone of a future where robots coexist with humans as collaborators. While hurdles remain, Nvidia’s blend of hardware excellence and software agility positions Thor as the linchpin of this transition. As we approach 2025, one thing is clear: the age of intelligent humanoids is no longer a question of “if,” but “how soon.”
