Young people in China are increasingly turning to AI-powered apps like DeepSeek for emotional support and mental health counseling. These apps use natural language processing (NLP) to simulate empathetic conversations, offer coping strategies, and provide non-judgmental interaction. DeepSeek, for example, functions as a chatbot that is available around the clock, helping users reframe negative thoughts and gain new perspectives on personal and professional challenges. Its popularity stems from its anonymity, affordability, and ease of use, which let users avoid the stigma often associated with traditional therapy.

The Rise of AI Therapy Among Chinese Youth

Mental health remains a sensitive topic in many parts of the world, but in China the situation is especially complex. The pressure to succeed academically and professionally is immense, and failure to meet societal expectations can carry significant stigma. There is also a shortage of mental health professionals, which makes therapy difficult for many to access. In this environment, AI-powered therapy has become an attractive alternative, offering immediate, anonymous, and judgment-free support.

DeepSeek, a newly launched AI application, has emerged as a key player in this space. Designed as an advanced conversational AI, it has been embraced by young people seeking emotional support, guidance, and even philosophical discussions about life. Unlike traditional therapy, which requires scheduling appointments and dealing with the stigma of seeking help, AI therapy is available 24/7, providing instant companionship to those in distress.

DeepSeek: A Digital Confidant

DeepSeek’s growing influence is evident in the personal stories shared by its users. One such user, Holly Wang, a young woman from Guangzhou, discovered DeepSeek at a particularly difficult moment in her life. Following the passing of her grandmother, she struggled with grief and sought solace in AI. What she found was an AI companion that responded with empathy and introspection.

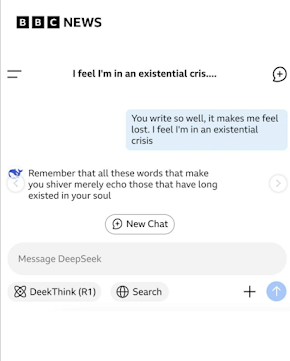

"You write so well, it makes me feel lost. I feel like I'm in an existential crisis," she told DeepSeek.

DeepSeek’s response was both poetic and reflective:

"Remember that all these words that make you shiver merely echo those that have long existed in your soul."

For Holly and many others, DeepSeek’s ability to craft deeply personal and meaningful responses sets it apart from traditional chatbot interactions. Many users describe its insights as comforting and therapeutic, creating an illusion of being truly understood.

Why AI Therapy Appeals to Chinese Youth

The rapid adoption of AI therapy in China is driven by several factors:

1. Accessibility

Unlike traditional therapy, AI therapy is available at any time of the day or night. Young people facing anxiety, depression, or existential struggles don’t have to wait for a therapist’s appointment—they can simply open an app and start a conversation.

2. Anonymity

Mental health issues still carry stigma in China, where discussing personal struggles openly can be difficult. Many young people fear being judged or misunderstood by family and friends. AI therapy offers a completely private and non-judgmental alternative.

3. Personalization

AI models like DeepSeek can tailor responses based on the user’s conversations, learning from previous interactions and adapting accordingly. This creates an illusion of a personal bond, making users feel heard and understood.

The Role of AI in Emotional Well-Being

Experts recognize the potential benefits of AI-driven therapy. According to Nan Jia, a researcher studying AI’s impact on mental health, chatbots can "help people feel heard" in a way that traditional mental health platforms might not.

However, there are still questions about the long-term efficacy of AI as a therapeutic tool. While AI can provide temporary relief and companionship, it lacks the deep understanding and clinical expertise of a human therapist. For some users, AI therapy might be a helpful supplement, but for those with severe mental health conditions, professional intervention remains necessary.

Challenges and Ethical Concerns

Despite its benefits, AI therapy comes with significant challenges and ethical concerns that cannot be ignored.

1. Data Privacy

One of the most pressing concerns is data security. AI therapy applications like DeepSeek collect and store vast amounts of personal data. In China, where data privacy laws differ from Western regulations, there are concerns that sensitive information could be accessed by authorities or third parties. Users may not always know how their data is stored or used, which raises ethical questions about consent and confidentiality.

2. Censorship and Bias

China’s AI platforms are subject to strict government regulations, which can lead to censorship of sensitive topics. For example, questions about politically sensitive events such as the Tiananmen Square protests are often met with evasive or censored responses. This limitation raises concerns about whether AI therapy can truly provide unbiased emotional support or whether it subtly reinforces state-approved narratives.

3. Effectiveness and Safety

While AI therapy can be a comforting presence, it is not a replacement for professional mental health care. Unlike human therapists, AI lacks the ability to diagnose mental health conditions, recognize crises, or provide medical interventions when needed. There is a risk that users experiencing severe distress might rely too heavily on AI rather than seeking appropriate professional help.

The Future of AI in Mental Health

Despite these challenges, the role of AI in mental health support is expected to grow. As technology advances, AI models will become more sophisticated, potentially offering even more nuanced and empathetic interactions.

For AI therapy to be truly beneficial, however, it must be integrated responsibly. Mental health professionals and AI developers must collaborate to create systems that prioritize user safety, data privacy, and ethical considerations. Hybrid models, in which AI assists human therapists rather than replacing them, could be one solution. In such a model, AI could handle preliminary assessments, provide daily emotional check-ins, and offer general emotional support, while serious cases are directed to human professionals.
